On the possibility of obfuscating code using neural networks

In this blog post, I will cover the current state of my research investigating the possibility of using neural networks to hide shellcode. But before we dig in, I will provide a little background information.

For those unfamiliar with neural networks, they are a type of computer system design that is inspired by how human brains and nervous systems function. Neural networks are radically different from the typical computer system you use day in and day out, which have a central processor, a memory unit, mass storage system, and inputs and outputs. These typical computer systems process instructions sequentially in the central processor.

By comparison, a neural network distributes its processing in parallel across its neurons. Conveniently, obfuscation is an inherent attribute of neural networks. Their incomprehensibility is generally considered a drawback, as engineers and scientists lack tools and techniques to formally verify how a neural network will behave in all situations. Those of us interested in hiding control logic and data however can find good use for this 'black box' attribute of neural networks.

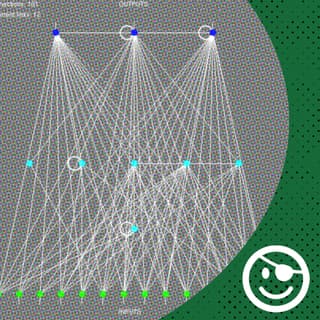

Above is a simple neural network. The neurons themselves are the colored circles, with input neurons at the bottom and output neurons on the top. The links between the neurons carry the “signal” from one neuron to another. If you think of a neural network as a virtual machine, the neurons and the links between them represent the hardware of the neural network computer system. The weights of the links between neurons and the activation responses of the neurons can be thought of as the software of the system. The weight of a link determines how much influence its input has on the activation response of the neuron into which it feeds. This video, 'Explained In A Minute: Neural Networks,' does an excellent job of illustrating the gist of neural networks: https://youtu.be/rEDzUT3ymw4.

The idea for this project was inspired by a research paper that discussed breaking automated software analysis techniques by obfuscating program control flow in neural networks ('Control Flow Obfuscation Using Neural Network to Fight Concolic Testing,' Ma, Ma, Liu, Huang, Gao, and Jia, 2014. In this research, scientists were able to conceal the trigger control logic and behavior of their program from analysis by training the neural networks to simulate the conditional behaviors of the program—pretty clever idea if you ask me.

I have been working to create a proof-of-concept encoder (Evolutionary Neural Network Encoder of Shenanigans (ENNEoS)) to place shellcode and the triggering control logic inside neural networks to determine if this is a promising technical approach to hide payloads and control logic from the prying eyes of reverse engineers. Neural networks are not programmed like typical computer systems, but they are 'trained' to provide a desired output or behavior for some particular input. As an example, we may want to train a neural network to output a specific shellcode if the correct input (e.g., password) is provided as an input.

The proof-of-concept encoder 'trains' the neural networks by using genetic algorithms to evolve the neural networks that solve the defined problem of outputting the correct shellcode for a given input. While this approach is not the most straightforward technique, it holds the possibility of creating recurrent neural networks that can use sequences of inputs as the triggering logic to release shellcode. A recurrent neural network can form short-term memory, which allows it to remember the previous input and use control logic requiring the correct sequence of inputs to release payloads. This ability would make brute-forcing the neural network to enumerate its possible outputs (i.e., payloads) significantly more difficult for reverse engineers.

To drive the genetic algorithm to solve the encoding problem, the encoder implements a 'fitness function.' A fitness function is an essential requirement to implementing an evolutionary process. Thinking back to the 'survival of the fittest' concept in evolution, it is the fitness function that defines what 'fittest' means within the encoder’s evolutionary process. While the underlying encoder software is rather complicated, conceptually, a fitness function is quite simple. A fitness function only needs to provide a score grading how 'good' or 'fit' a particular neural network performance is. The closer a neural network is to behaving the way you want (i.e., outputting the desired shellcode given the desired input), the higher the score it should receive from the fitness function. Provided with the fitness scores, the genetic algorithm will continue evolving the neural networks in its population until one matches the desired behavior.

The encoder loops through the genetic algorithm as seen in the following figure. The encoder uses a population size of 3,000. Each 'individual' in the population is a wrapper 'bot' around a unique neural network. The wrapper translates inputs (i.e., characters of the password) into a floating-point value that the neural network can accept as an input and translates the neural network output into a form usable by the loader (i.e., shellcode characters). Different execution threads test these neural networks by pushing inputs into them and recording their outputs. These inputs/outputs are then sent to the fitness function to measure how close they are to the desired behavior (i.e., dropping the correct payload). The main thread then pushes the fitness scores into the genetic algorithm, sets off the evolution process, and starts the next generation. Over time, the neural networks in the population will get closer and closer to solving the problem until a solution is found.

During my research, I found that evolving a single large neural network to encode a desired shellcode was impractical, as each additional character of shellcode greatly increased the encoding time. Breaking the shellcode into smaller chunks proved to be an effective performance improvement. This resulted in a large set of neural networks, all using the same control logic but outputting a different part (i.e., chunk) of the shellcode. A simple demonstration program loads the neural networks created by the encoder, provides them with inputs, and copies their outputs into executable memory. That memory is then executed and the payload is run.

The current status of the research project is that the technique has been proven to work. I have successfully encoded shellcode into the neural networks, pulled it back out again with the loader, and popped a shell with it. However, I would not yet call this a practical technique in its current state. I have created a second fitness function that I believe implements a practical encoder. This second proof-of-concept fitness function is driving the algorithm to create neural networks that output benign shellcode (i.e., a nopsled) when the input is not correct and drops a malicious payload (i.e., pop a shell) when the input is correct. This second fitness function appears to be working; however, the encoding time would be roughly two (2) to three (3) weeks on a high-end laptop. The current multi-threading design does not scale well beyond eight (8) threads. I have a tremendous amount of performance code debt that needs to be paid in the near term in order to push the project forward.

The next development steps are to refactor the encoder to greatly improve its multi-threaded efficiency. The current multi-threading was designed under the assumption that a single neural network would be evolved to solve the encoding problem. This is not what turned out to work in practice. Fortunately, the ongoing refactoring will greatly improve the speed of encoding, particularly on systems with large numbers of CPU cores, as each 'chunk' of the shellcode can be solved by an independent genetic algorithm. This will allow the performance to greatly scale up over multiple CPU cores. I am also working to create reverse engineering capture the flag (CTF)-style challenges with this technique, which will hopefully provide insight into how resistant to reverse engineering this type of payload encoding is.

There is also the possibility that my genetic algorithm approach to implementing an encoder will not scale out to a practical solution. I am starting to consider more traditional neural network training techniques to implement the encoder, which may prove to be more computationally efficient and provide a more practical approach.

The current ENNEoS project is implemented in C++ (apologies to the #pythonians) and can be found on my GitHub page (https://github.com/hoodoer/ENNEoS) if anyone wishes to take it for a spin. Feel free to contact me if you have any questions on the software or the technique. You can see a demo of the proof-of-concept at the end of my talk at CackalackyCon:

References:

https://ink.library.smu.edu.sg/cgi/viewcontent.cgi?article=3260&context=sis_research

https://github.com/hoodoer/ENNEoS

https://youtu.be/rEDzUT3ymw4

https://youtu.be/nTb6SBGaLR8